After a few weeks away, I’m back at my promised effort to investigate multiple questions about teaching, learning, and artificial intelligence. This week, we’ll see how the ways in which we talk about artificial intelligence reflect our notions (and misunderstandings) of human intelligence.

A magical intelligence in the sky?

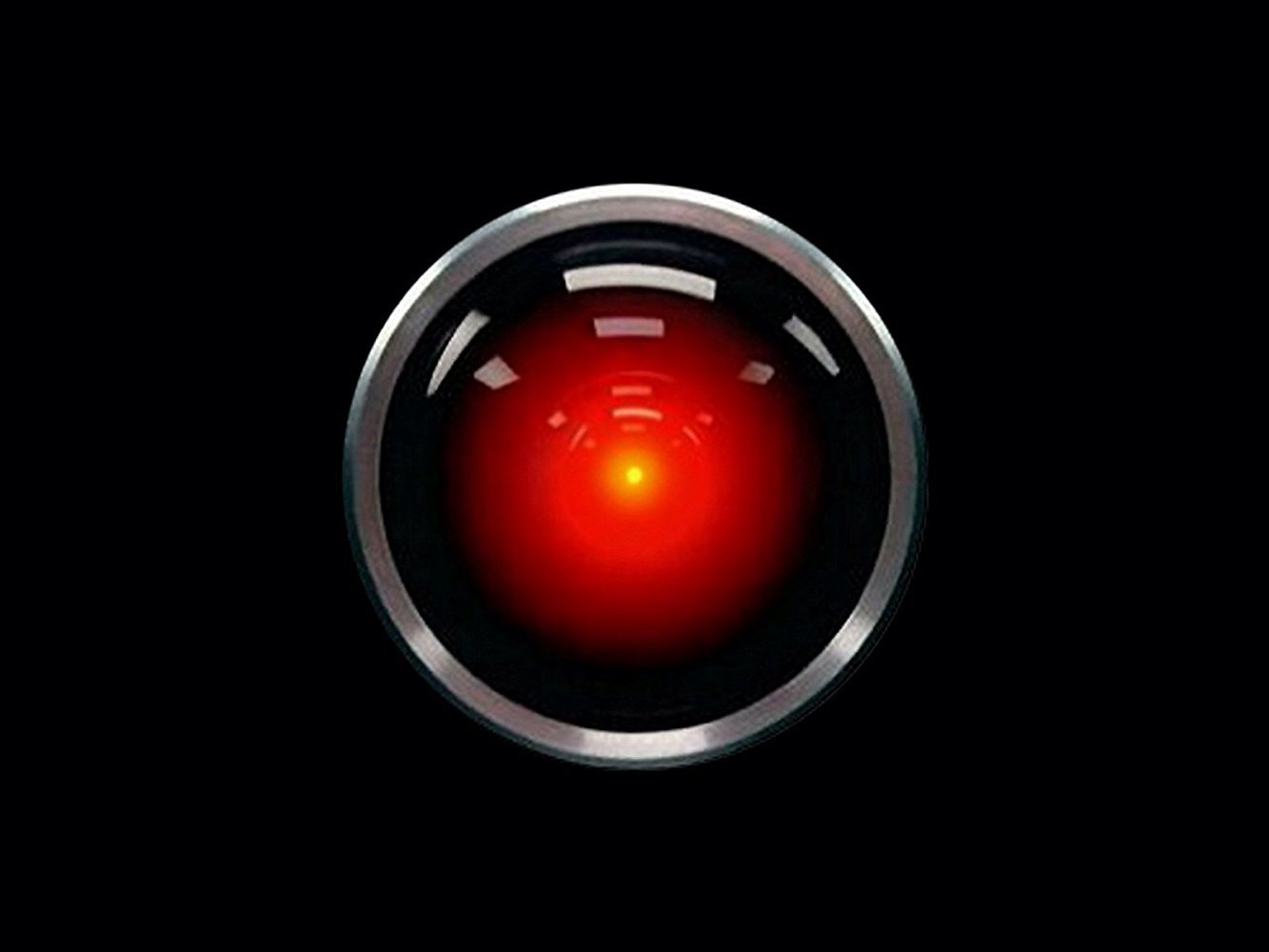

Recently, I listened to a podcast series called Good Robot, and the host noted that the rhetoric around AI can get … religious. Tech CEOs refer to ‘magical intelligences in the sky,’ and use the descriptor ‘superintelligent’ to describe AGI, or artificial general intelligence (it’s worth repeating that even the Google AI Overview considers this to be a hypothetical version of AI). These CEOs stress the risk of apocalypse at the hands of a, yes, ‘superintelligent’ AI, using thought experiments like the paperclip maximizer (or, in an updated version, the squiggle maximizer): an AI that, focusing too much on a single task, turns the whole world into paper clips (or squiggles).

With all of this breathless talk, it’s easy to forget that some of this is still hypothetical. Today, the tools at our disposal, while impressive, continue to generate ‘slop’ and ‘hallucinate’ articles/facts that don’t exist. There are problems of sycophancy (like a friend who calls your every idea great, whether it is or not), and AI image generators can produce what are clearly anatomical mistakes.

What do we misunderstand about AI when we talk about superintelligence, and how might this reflect our misunderstanding of human intelligence?

There’s clearly a gap between the pie-in-the-sky rhetoric of superintelligence and the impressive outputs of specific tools like ChatGPT. Yet, when we veer towards the overarching description of AI and leave behind specific functions, we commit an error similar to the one we make when we bundle a range of human skills and abilities under the single label of intelligence (and especially when we treat a single measure, like IQ, as an ‘objective’ gauge of this intelligence). For years, IQ was seen as a synecdoche for human intelligence, with the highest scores reserved for the rare geniuses among us. Howard Gardner famously put forward his theory of multiple intelligences to counter this narrow (and culturally constructed) view of intelligence (though the theory of multiple intelligences is highly contested: at worst a myth, and at best misunderstood).

When we dig further into human intelligence, we find ourselves face to face with a quote from early psychologist Henri Wallon: Or, in the words of a more recent psychologist, Daniel Willingham, “Expertise is domain specific” (and predicated on inflexible knowledge). Is that the same thing as Gardner’s theory of multiple intelligences? This can lead to circularity, where a particular intelligence is defined by being ‘good’ at that thing, and being good at that thing is a sign of that particular intelligence… While a constellation of skills and abilities might be associated with general intelligence, our academic/artistic experiences display a range of ability and expertise that is idiosyncratic to particular disciplines and particular people, just as athletes at the Olympics and Paralympics have different bodies, often suited to their specific events.

Why does this misunderstanding matter?

So, what’s the problem here? Carol Dweck is famous for her work on the ‘growth mindset,’ the idea that intelligence is changeable through effort. What’s interesting is that some research suggests that exposure to the mindsets is enough to improve performance, and other research points to the importance of instructors believing their students can learn and improve. Does this mean that, by sheer grit and determination, we can make ourselves smarter? Not so fast, says Dweck herself: it’s not just about hard work, it’s about trying different strategies and approaches to the challenges in front of us. We can learn to get better at specific tasks. In a cheeky essay, Jarek Janio points out that while we’re willing to ‘engineer’ (adapt) our ChatGPT prompts to get an improved result, this ‘mindset’ does not always carry over to how we treat our students.

When we begin to break down a broad idea like ‘artificial intelligence’ into the automation of particular tasks, we begin to see something parallel to our understanding of learning as the result of wrestling with particular challenges: recalling information, applying it in novel situations, making sense of new challenges, taking what we know and using it to understand what we don’t (yet) know. We break down the larger task of getting ‘smarter,’ or becoming an ‘artist,’ into the manageable steps of learning a particular skill.

Conclusion

In what I hope will be a clear trend throughout this series, there are few productive/constructive ways of talking about artificial intelligence in higher education without interrogating our ideas about what learning is, what intelligence is, etc. (and why these things matter). Will we stick with fairy tales about ‘the magic superintelligence in the sky,’ or will we come back down to earth and start where we are (not where we could be at some undefined point in the future)?