Welcome to summer. Congratulations to this year’s graduates and to the faculty, family, friends, and everyone else who supported them along the way.

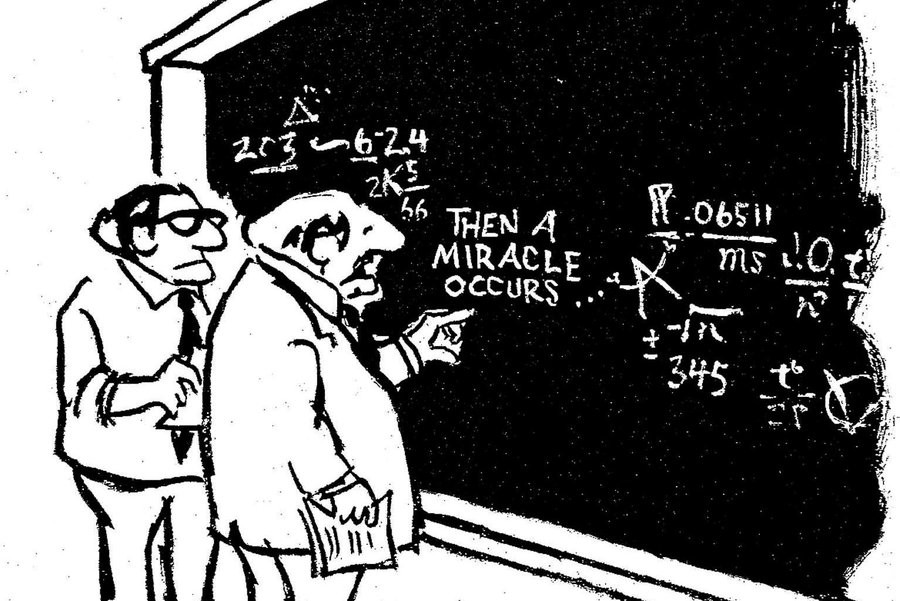

Looking ahead to summer, I’m reflecting on how we’re in a different place than we were last year. The role of Generative AI applications is clearly not going away, yet the discourse about how to respond is developing, fitfully but steadily. I’ve been reading, thinking, listening to podcasts and audiobooks, talking with peers, and engaging with various takes on social media. I’ve posted on Generative AI before (for example, ‘The fantasy of frictionless learning’), but this summer I plan to write a series of posts on responses to Generative AI in teaching and learning. Together, they amount to something of a ‘teaching philosophy’ for me when it comes to Generative AI, and they will cover a range of angles:

- What do we misunderstand about Generative AI when we speak of superintelligence and/or fail to distinguish among the tasks it’s being asked to do? Howard Gardner’s theory of multiple intelligences (along with the observation that expertise is domain-specific) points us toward more, not less, specificity about the uses AI is put to, just as it asks us to be specific about the tasks we ask students to do.

- How do we make sense of the give and take of involving Generative AI in assignments? People talk loosely about ‘AI-generated feedback,’ but what tools or frameworks help us think through the tradeoffs and the possible lost opportunities for student learning when we engage with GenAI? For one such framework, see ‘The Innovation Bargain.’

- How do we make sense of academic integrity in light of Generative AI? Tricia Bertram Gallant, co-author of the recent book The Opposite of Cheating, encourages instructors to treat academic integrity as a teaching and learning (that is, pedagogical) challenge just as much as a policy challenge. I’m reading the book this summer and look forward to sharing thoughts, reactions, and ideas in response.

- I don’t like the phrase ‘silver lining,’ because this is a situation we didn’t ask to be in. At the same time, I’m happy to partner with you on the opportunity it presents: to ask deep questions of our assignments (like Unicorse’s ‘Why should I care?’) and to challenge ourselves to make them more authentic and meaningful. Transparent assignment design is a model whose importance has only grown with the emergence of ChatGPT.

- Finally, it’s understandable if we’d rather not engage with Generative AI in our courses. There are myriad ethical and privacy concerns, and some see engaging with AI tools as tantamount to deskilling ourselves. If your stance is ‘no AI allowed’ and ‘business as usual,’ that’s your choice. I hope we can sustain a pluralistic space, but I will also maintain that sitting things out has a cost. I’ll give the final word to a colleague, Lew Ludwig, writing as a guest on Marc Watkins’ wonderful Substack:

“But, choosing not to engage at all, even on ethical grounds, doesn’t remove us from the system. It just removes our voices from the conversation… We lose ground we may never get back. And we miss the opportunity to help students learn not just how to use AI, but how to question it—how to stay human inside systems that weren’t built with their humanity in mind.”

This isn’t the only thing I’ll be thinking about this summer (I’ll also attend an annual conference on grading, a digital gathering for CTL staff, and a week-long online event focused on the role of AI in education), but it’s top of mind for many of us. I want to create programs and resources that are genuinely useful and that address the challenges we all face as educators. The mission of the CT&L is to lead with the available evidence, to frame challenges constructively, and to serve as a thought partner. Don’t hesitate to reach out, and have a good summer!